According to research by BrightEdge, 68% of online experiences begin with a search engine. That figure suggests that a position on the first page of search results can bring in a lot of visitors.

You can comfortably get a better ROI if your site ranks first in search results, although this is easier said than done.

You have to think about a lot of things like off-page SEO, on-page SEO, the quality of your content, and technical SEO.

A successful SEO plan combines all of these tactics with regular improvements so that you get consistent results.

So, in this article, I’ll explain what you should check when performing a technical SEO audit.

What is a technical SEO audit?

A technical SEO audit, or simply a technical audit, is a procedure for evaluating different aspects of a site to ensure that it follows SEO guidelines. These aspects include elements that are linked directly to the ranking factors of search platforms.

For example, technical SEO includes HTML components, meta tags, the code of your site, CSS, and JavaScript.

A technical SEO audit checklist involves evaluating these elements of your site. By doing so, you can note down and fix the problems your site is currently having, ensuring that it is search engine friendly.

Steps to perform a technical SEO audit

A technical SEO audit involves checking and optimizing many different things. It may feel like a lot, but it becomes manageable if you concentrate on one thing at a time. So, with that in mind, let’s dive in!

Indexation and crawlability

First, you have to ensure that search platforms can correctly crawl and index your site. You can do so by evaluating a few things:

- Sitemap

- Subdomains

- Robots.txt

On top of that, you’ll also have to ensure that you’re using canonical and meta tags correctly on your web pages.

Sitemap

A sitemap is an HTML or XML file that contains details about the web pages, images, videos, and other files on your website. Search platforms read this file to crawl your website more effectively.

Sitemaps can also specify which web pages and files are crucial on your website and offer useful details regarding these.

Sitemaps are of two types: XML and HTML.

- An XML sitemap is just for the search platforms to use. It helps the search platform bot to effectively crawl your site.

- An HTML sitemap allows your visitors to read and know about your website’s structure and look for web pages efficiently.

You must include all the pages you want a search engine to index in your XML sitemap. If you see that your web pages are not properly crawled or indexed, check your sitemap to ensure that it is accurate and updated.

To locate the XML sitemap of your site, try the most common location by typing this into your browser’s address bar:

https://example.com/sitemap.xml

If you can’t find your sitemap this way, you can use Google’s search operators. Just type any of these queries into Google:

- site:domain.com filetype:xml

- site:domain.com inurl:sitemap

- site:domain.com ext:xml

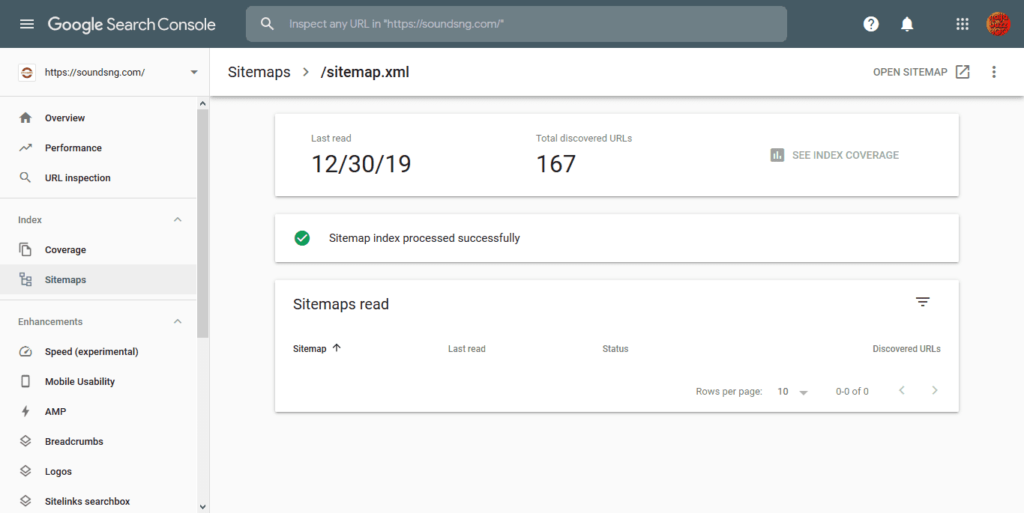

You should also submit your sitemap to Google Search Console for easier crawling and indexing. Once a valid sitemap is submitted, you should see “Success” in the status column. If you see a “Has errors” or “Couldn’t fetch” status, it means there was a problem with your sitemap.
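
To compare a sitemap against the pages you want indexed, a short script can help. The following is a minimal sketch in Python, assuming the sitemap follows the standard sitemaps.org format; the sample XML and the function names are made up for illustration, and a real audit would download the file first.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content, inlined so the example runs offline.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-01-15</lastmod></url>
  <url><loc>https://example.com/clothing/men/shirt</loc></url>
</urlset>
"""


def sitemap_urls(xml_text):
    """Return the <loc> value of every <url> entry in a sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def missing_from_sitemap(xml_text, pages_to_index):
    """Return the pages you want indexed that the sitemap does not list."""
    listed = set(sitemap_urls(xml_text))
    return [page for page in pages_to_index if page not in listed]


if __name__ == "__main__":
    wanted = ["https://example.com/", "https://example.com/about"]
    print(missing_from_sitemap(SAMPLE_SITEMAP, wanted))
```

Any URL the script reports is a page you expect to be indexed but that the sitemap does not mention.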

Subdomains

Here you’re checking your subdomains and the number of indexed web pages under each one. Once again, you’ll use the site: operator and type the following in Google:

site:example.com -www

The -www part excludes the www subdomain, so you’ll see the indexed web pages under every other subdomain. Then you have to see whether any of these web pages are copies of the ones present in your root domain (example.com).

Robots.txt

As the name suggests, robots.txt is a text file that lets you block sections or individual pages of your website from being crawled by search engine bots. It uses the Disallow directive to tell bots not to crawl the specified paths.

To find your robots.txt file, type the following:

https://example.com/robots.txt

Once you find the robots.txt file, make sure that it accomplishes these three tasks:

- Direct search platform crawlers away from sensitive files or folders

- Stop crawlers from overloading the server’s resources

- Tell crawlers where to find the sitemap

Review your file and ensure that the Disallow directives are used properly, so that you are not accidentally blocking pages you want indexed.
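
One way to review the file is to test it against a list of URLs. Here is a minimal sketch using Python’s standard `urllib.robotparser` module; the robots.txt content, the URLs, and the `audit_robots` helper are hypothetical examples, not taken from a real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""


def audit_robots(text, urls, agent="*"):
    """Summarize which URLs are blocked, plus crawl delay and sitemap hints."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return {
        "blocked": [u for u in urls if not parser.can_fetch(agent, u)],
        "crawl_delay": parser.crawl_delay(agent),
        # site_maps() requires Python 3.8+; it returns None when no
        # Sitemap line is present.
        "sitemaps": parser.site_maps() or [],
    }


if __name__ == "__main__":
    pages = ["https://example.com/", "https://example.com/admin/login"]
    print(audit_robots(ROBOTS_TXT, pages))
```

If a page you want indexed shows up in the blocked list, a Disallow rule is probably too broad.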

Addressing issues

The crawling and indexing problems that you discover in your technical SEO audit may belong to one of the following types based on your proficiency:

- Problems you can solve yourself

- Problems that require you to get assistance from a developer or an admin

You can solve the problems linked to your website’s structure on your own. But if you are new to SEO or lack the required knowledge, hiring an expert will save you time and energy.

Site structure

Website architecture shows how the pages of your site are arranged.

A good site architecture is built around topic clusters and pillar content. It also ensures that visitors can reach the pages on your site with the fewest clicks possible.

It should be logical and easy to extend as your site grows. A thoughtful site architecture has the following characteristics:

- Any page can be reached from the homepage in 2-3 clicks

- Navigation is sensible and enhances the UX

- Content and web pages on similar topics are grouped together thoughtfully

- All the URLs are short and include a slug that describes what the page is about

- Every web page displays breadcrumbs, so visitors know where the current page sits within your site

- Internal links allow visitors to explore your website naturally

It is difficult to browse a website that has a bad structure. But, when a site’s well designed and includes all the right components, it helps not just your visitors but your SEO initiatives as well.

Site hierarchy

If a visitor has to click 10 times to go to a web page from your site’s homepage, that means your website has a very deep hierarchy. Web pages that are at the bottom of the hierarchy are thought to be low-value by search platforms.

Perform an audit to cluster web pages according to keywords and flatten the hierarchy. By doing so, you’ll simplify your URL architecture and improve the way visitors explore your site.

Image Source: Bluehost
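
To see how deep your hierarchy is, you can compute the number of clicks each page is from the homepage. The sketch below assumes you already have a map of internal links, for example from a crawler export, and runs a breadth-first search over it. The `SITE_LINKS` data and the function names are hypothetical.

```python
from collections import deque

# Hypothetical internal link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/clothing", "/blog"],
    "/clothing": ["/clothing/men"],
    "/clothing/men": ["/clothing/men/shirt"],
    "/blog": ["/"],
}


def click_depths(links, home="/"):
    """Return the minimum number of clicks from the homepage to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, home="/", max_clicks=3):
    """List pages that need more than max_clicks clicks to reach."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


if __name__ == "__main__":
    print(click_depths(SITE_LINKS))
    print(too_deep(SITE_LINKS, max_clicks=2))
```

Pages that never appear in the result cannot be reached from the homepage at all, which is worth checking separately.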

URL structure

Like your site structure, your URL architecture should be consistent throughout and easy to understand. For instance, if a user follows the breadcrumb navigation to men’s shirts, the breadcrumb trail should look like this:

Homepage > Clothing > Men > Shirt

The URL has to show exactly the same structure:

example.com/clothing/men/shirt

If a URL does not match the navigation structure, it is a sign of poor URL architecture.
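
This comparison is easy to automate. Below is a rough sketch that turns a breadcrumb trail into the URL path you would expect and compares it with the actual URL. The slug rules (lowercase, hyphens for anything that is not a letter or digit) are an assumption, and the URL needs to include the scheme so that it parses correctly.

```python
import re
from urllib.parse import urlparse


def breadcrumb_to_path(breadcrumb):
    """Turn 'Homepage > Clothing > Men > Shirt' into '/clothing/men/shirt'."""
    parts = [part.strip() for part in breadcrumb.split(">")]
    # Skip the first crumb: the homepage corresponds to the site root.
    slugs = [re.sub(r"[^a-z0-9]+", "-", part.lower()).strip("-") for part in parts[1:]]
    return "/" + "/".join(slugs)


def url_matches_breadcrumb(url, breadcrumb):
    """Check whether the URL path mirrors the breadcrumb trail."""
    path = urlparse(url).path.rstrip("/") or "/"
    return path == breadcrumb_to_path(breadcrumb)


if __name__ == "__main__":
    trail = "Homepage > Clothing > Men > Shirt"
    print(url_matches_breadcrumb("https://example.com/clothing/men/shirt", trail))
    print(url_matches_breadcrumb("https://example.com/p?id=123", trail))
```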

Internal linking

Next, you should look at your site’s linking profile. When you optimize your website’s architecture and increase convenience for search platforms and visitors, you have to monitor the quality of internal linking within your website.

Internal links mainly take two forms:

- Navigational links: Mostly located in the header or footer of your site

- Contextual links: Used in the text of a web page

Internal links in your website should use descriptive anchor text that tells readers precisely what to expect when they visit the linked URL. Remember that what is useful for visitors is most likely also useful for search platform crawlers.

You can use Google Analytics to identify web pages that show up in search results but suffer from weak internal linking.

Look for web pages that have low traffic even though they have good content, and try to link them to some of your other pages.

Ensure that whenever you publish a new article, it contains internal links to other pages on your site. By doing this, you’ll lower the chances of having to fix internal linking problems later.
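
If you have a map of your internal links, you can also count how many pages link to each page and spot the ones that receive few or no links. This is a minimal sketch with made-up data; the `SITE_LINKS` map and the function names are hypothetical.

```python
# Hypothetical internal link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/blog", "/clothing"],
    "/blog": ["/", "/clothing"],
    "/clothing": ["/"],
    "/blog/seo-audit": ["/blog"],
}


def inbound_link_counts(links):
    """Count how many distinct pages link to each page."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                counts[target] = counts.get(target, 0) + 1
    return counts


def weakly_linked(links, minimum=1):
    """List pages with fewer than `minimum` inbound internal links."""
    counts = inbound_link_counts(links)
    return sorted(page for page, n in counts.items() if n < minimum)


if __name__ == "__main__":
    print(weakly_linked(SITE_LINKS))
```

In this sample, `/blog/seo-audit` links out to other pages but nothing links to it, so it is a candidate for new internal links.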

Site security

Information sent over HTTP is not encrypted, which means that a third-party intruder might intercept it. That’s why your site visitors must never be prompted to enter bank or personal details over HTTP.

How do you solve this problem? The answer is to migrate your site to HTTPS, which relies on an SSL/TLS certificate to encrypt the connection. These certificates are issued by certificate authorities, which also verify the authenticity of the website.

When you use HTTPS, many browsers display a lock icon alongside your site’s address.

HTTPS uses Transport Layer Security (TLS) to make sure that the information cannot be read or manipulated while it is transmitted.

Site speed

The loading speed of your site clearly affects the UX, and that’s why it is one of the ranking factors for search platforms.

In a technical audit, you have to analyze two important things:

- Webpage loading: The amount of time it takes to render one page

- The average speed of your website: How long it takes in general for a group of pages to load

If you improve the speed of individual web pages, your website’s average speed will improve as well.

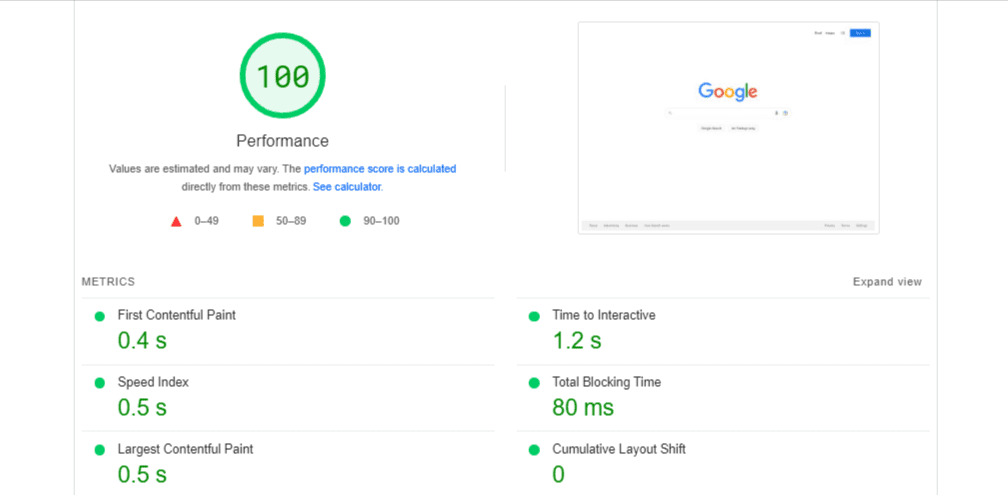

Speed is so crucial that Google has created a dedicated tool, PageSpeed Insights, which gives the page you enter a score out of 100 based on indicators that include web page loading time.

The Core Web Vitals extension can also help you assess your site’s speed. This extension evaluates web pages on three indicators that describe the UX:

- Largest Contentful Paint (LCP)

- First Input Delay (FID), which Google replaced with Interaction to Next Paint (INP) in March 2024

- Cumulative Layout Shift (CLS)
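
To interpret measurements of these three indicators, you can compare them against thresholds. The sketch below uses the "good" and "needs improvement" boundaries that Google has published for LCP, FID, and CLS. Treat the numbers as an assumption and check Google’s current documentation before relying on them. The function name and the sample values are made up.

```python
# (upper bound for "good", upper bound for "needs improvement") per metric.
# Values above the second bound are rated "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
}


def rate_metric(name, value):
    """Rate a measurement as 'good', 'needs improvement', or 'poor'."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    measurements = {"LCP": 2.1, "FID": 250, "CLS": 0.3}  # hypothetical page
    for metric, value in measurements.items():
        print(metric, value, rate_metric(metric, value))
```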

To optimize your web page loading speed, start by working on the images.

Images are often the main reason web pages take a long time to load. That’s why you must try to lower their file size while making sure that the quality isn’t compromised.

Next, you have to concentrate on CSS and JavaScript improvements. Implement minification, which removes unnecessary characters such as extra whitespace and comments to make JavaScript and CSS files smaller. If you’d like to improve the speed further, try to:

- Utilize browser caching

- Lower the size of media files like GIFs or videos

- Select a hosting company that can handle the size of your site

- Utilize a Content Delivery Network
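
To give an idea of what the minification step mentioned above does, here is a deliberately naive sketch for CSS. It only strips comments and extra whitespace with regular expressions, and it can break stylesheets that contain these characters inside strings, so in practice you would rely on a dedicated minifier. The `minify_css` function is a hypothetical helper, not part of any library.

```python
import re


def minify_css(css):
    """Naively shrink CSS by removing comments and redundant whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                   # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)     # trim around punctuation
    return css.strip()


if __name__ == "__main__":
    original = "body {\n  margin: 0;  /* reset */\n  color: red;\n}\n"
    minified = minify_css(original)
    print(minified)
    print(len(original), "->", len(minified), "characters")
```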

Problems with identical content

If your site has duplicate or almost duplicate content, it could cause multiple issues like:

- The wrong version of the web page might be shown in search results

- Search platforms might not understand which page should be emphasized, leading to indexing problems

Conduct a content audit of your website to ensure that you don’t have duplicate content problems. You can also use pillar pages with topic clusters to develop well-organized content that increases your website’s credibility.
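
Part of such a content audit can be scripted. The following sketch groups pages whose text is identical after normalizing case and whitespace. It assumes you have already extracted the main text of each page, and it will not catch near-duplicates, which need fuzzier comparison. The page data and the function names are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after normalizing whitespace and letter case."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Return groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    # Hypothetical page texts keyed by URL.
    pages = {
        "/shirts": "Cotton shirts for men.",
        "/shirts?sort=price": "Cotton  shirts for MEN.",
        "/about": "About our store.",
    }
    print(find_duplicates(pages))
```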

Different versions of a page

One of the most frequent causes of duplicate content is having multiple versions of the same page. For instance, a website might be reachable over both HTTP and HTTPS, and both with and without the www prefix. The solution is:

- Use the canonical tag to specify the original version of a webpage

- Create 301 redirects from HTTP to HTTPS webpages
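
To find out which URL variants should point to the same canonical version, you can normalize them and group the results. This is a minimal sketch that assumes the preferred version uses HTTPS and the www host. The `preferred_host` parameter and the helper names are made up, and the actual redirects or canonical tags still have to be set up on your server and pages.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_www(host):
    """Remove a leading 'www.' from a host name."""
    return host[4:] if host.startswith("www.") else host


def canonical_url(url, preferred_host="www.example.com"):
    """Map HTTP/HTTPS and www/non-www variants to one preferred URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if strip_www(host) == strip_www(preferred_host):
        host = preferred_host
    path = parts.path or "/"
    # Always use HTTPS and drop the fragment, which servers never see.
    return urlunsplit(("https", host, path, parts.query, ""))


def group_variants(urls, preferred_host="www.example.com"):
    """Group URL variants under their canonical form."""
    groups = {}
    for url in urls:
        groups.setdefault(canonical_url(url, preferred_host), []).append(url)
    return groups


if __name__ == "__main__":
    variants = [
        "http://example.com/shirts",
        "https://example.com/shirts",
        "http://www.example.com/shirts",
        "https://www.example.com/shirts",
    ]
    print(group_variants(variants))
```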

Redirect issues

Redirects and page errors impact the UX and might cause problems when a search engine crawls your website. Below are the HTTP status codes you are most likely to come across:

300s redirect codes

These codes mean that when visitors and search platforms arrive at the current web page, they are sent to a different web page. Some of the common codes include:

- 301 – In this case, the old page has been moved or deleted, and visitors are directed to either a newer version or a different page. It is used for permanent redirects.

- 302 – This code indicates a temporary redirect, and it can be used if you’re, for instance, trying out a different web page layout

- 307 – This is a temporary redirect as well, but unlike 302 it guarantees that the request method stays the same when the redirect is followed

400s client errors

These codes show that the web page you are requesting is unavailable. Frequent 400s codes are:

- 403 – Forbidden: the server refuses access to the page, typically because of permission settings

- 404 – The page does not exist or is not found

- 410 – The page is deleted permanently

- 429 – Too many requests were sent to the server in a short period
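
When you review crawl results, it helps to sort the status codes listed above into groups. Here is a small sketch that uses Python’s standard `http.HTTPStatus` to look up the official reason phrase. The category labels and the `classify_status` function are my own illustration, not a standard.

```python
from http import HTTPStatus

TEMPORARY_REDIRECTS = {302, 307}


def classify_status(code):
    """Return (reason phrase, audit category) for an HTTP status code."""
    phrase = HTTPStatus(code).phrase
    if code == 301:
        category = "permanent redirect"
    elif code in TEMPORARY_REDIRECTS:
        category = "temporary redirect"
    elif 400 <= code < 500:
        category = "client error"
    else:
        category = "other"
    return phrase, category


if __name__ == "__main__":
    for code in (301, 302, 307, 403, 404, 410, 429):
        print(code, *classify_status(code))
```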

Meta tags

A meta tag provides more information regarding a page’s content to the search platform crawler.

Meta tags are incorporated into your web page’s HTML code. You should know about the following kinds of meta tags:

- Title tags

- Meta descriptions

- Robots meta tags

- Viewport meta tags

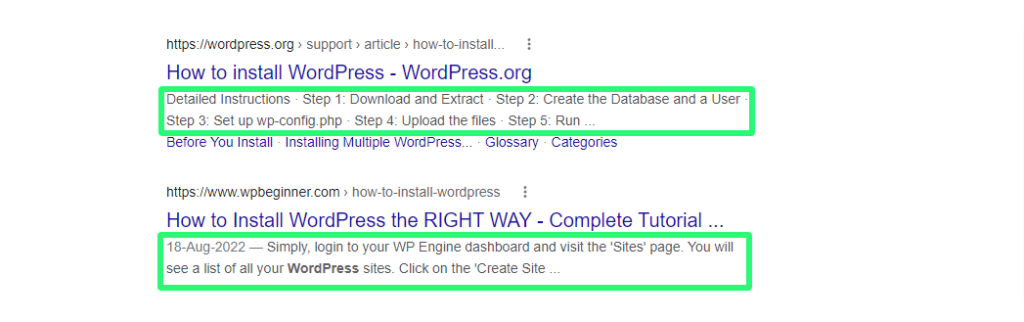

The meta description is the text you see below the title in search engine results. It is not a ranking factor, but it lets users know what to expect before they click through to a website from the results page.

A robots meta tag is placed in the code of a page and gives search platform crawlers instructions such as index, noindex, follow, and nofollow.

The viewport tag tells the browser how to size and scale a web page based on the visitor’s device. However, you need a responsive design for this to work.
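
To check which of these tags a page actually contains, you can parse its HTML. The sketch below uses Python’s built-in `html.parser`. The sample HTML, the `MetaAudit` class, and the `audit_meta` function are hypothetical, and a real audit would fetch each page first.

```python
from html.parser import HTMLParser

# Hypothetical page source, inlined so the example runs offline.
SAMPLE_HTML = """<html><head>
<title>Shirts for Men | Example Store</title>
<meta name="description" content="Browse cotton and linen shirts for men.">
<meta name="robots" content="index, follow">
<meta name="viewport" content="width=device-width, initial-scale=1">
</head><body></body></html>"""


class MetaAudit(HTMLParser):
    """Collect the <title> text and all named <meta> tags of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = attrs.get("name")
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and name:
            self.meta[name.lower()] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_meta(html):
    """Return the title and key meta tags; missing tags map to None."""
    parser = MetaAudit()
    parser.feed(html)
    parser.close()
    report = {"title": parser.title.strip() or None}
    for name in ("description", "robots", "viewport"):
        report[name] = parser.meta.get(name)
    return report


if __name__ == "__main__":
    print(audit_meta(SAMPLE_HTML))
```

A value of `None` in the report means the tag is missing from the page.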

Schema and structured data

The last thing you have to check in your technical SEO audit is the schema.

In your web page’s code, utilizing certain tags and microdata will help search platforms better understand the content of the page. These tags help search platforms properly crawl and index web pages.

Schema is a standardized markup vocabulary that website developers use to let search platforms create content-rich results for a page in search results.

For pages you believe can be shown as enriched and interactive search engine results, you can use schema markup to compete for this spot.

The purpose of employing structured data is to assist search platforms in correctly reading the information on your website, which can lead to your website being displayed in enriched results.

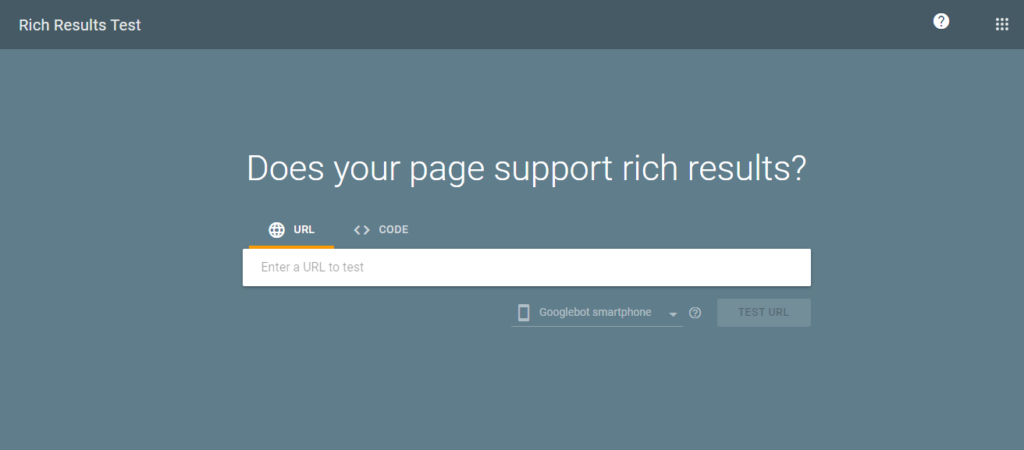

If you choose to compete for a rich search result, utilize Google’s Rich Results Test to test the code on your website.
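
As an illustration of what such markup can look like, here is a sketch that builds a JSON-LD snippet for an article using the schema.org Article type. The headline, author, and date are placeholder values, and `article_json_ld` is a hypothetical helper. Which properties a given rich result requires is something to confirm in Google’s documentation.

```python
import json


def article_json_ld(headline, author_name, date_published):
    """Build a JSON-LD <script> block describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    # Escape "</" so a value can never close the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(article_json_ld("How to Run a Technical SEO Audit", "Jane Doe", "2024-01-15"))
```

You can paste the output into the head of the page and then run it through the Rich Results Test.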

If your code is clear and accurate, identifying technical SEO problems is a lot quicker and significantly less stressful.

Conclusion

There are three kinds of SEO: on-page, off-page, and technical, with technical SEO being the most essential one. It makes no difference how good your on-page or off-page SEO is if your site is lacking the fundamental technical requirements.

When performing a technical SEO audit, there will always be numerous issues to tackle, so do not be intimidated by that.

The most essential thing is to determine your objectives. Begin with the important issues and work your way down by focusing on one thing at a time.